Machine-Generated Art

In 1995, IEI began experimenting with text-to-image and image-to-image transformations using multilayer perceptrons and deep nets. Although much of this work was proprietary, the most interesting and best-publicized efforts focused on what happened when the input patterns to these nets were pinned at constant values and internal disturbances were introduced to the nets' connections. We found that within a particular range of disturbance levels, a 'Goldilocks zone,' if you will, these nets began to output novel images that were strongly reminiscent of their original training sets. In other words, the nets were generating very plausible one- and two-off variations on what they had previously learned, without recourse to complex human-conceived algorithms!
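The general idea described here, holding a net's input fixed while disturbing its connection weights and watching how far the output drifts, can be illustrated with a minimal sketch. This is not IEI's patented implementation, which the text does not describe. The two-layer network, the random weights standing in for a trained generator, and the reading of "disturbance level" as the standard deviation of additive Gaussian noise on each weight are all assumptions, and the names `forward`, `perturb`, `generate`, and `mean_deviation` are hypothetical.

```python
import math
import random

def forward(x, w1, w2):
    """Two-layer perceptron: tanh hidden layer, sigmoid output layer."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in w1]
    return [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
            for row in w2]

def perturb(weights, level, rng):
    """Copy a weight matrix, adding zero-mean Gaussian noise to every connection.

    ASSUMPTION: the disturbance level is the noise standard deviation.
    """
    return [[w + rng.gauss(0.0, level) for w in row] for row in weights]

def generate(x_pinned, w1, w2, level, n, seed=0):
    """Keep the input pinned and draw n outputs, each from a freshly perturbed net."""
    rng = random.Random(seed)
    return [forward(x_pinned, perturb(w1, level, rng), perturb(w2, level, rng))
            for _ in range(n)]

def mean_deviation(samples, baseline):
    """Average absolute difference between sampled outputs and the unperturbed output."""
    total = sum(abs(s - b) for out in samples for s, b in zip(out, baseline))
    return total / (len(samples) * len(baseline))

if __name__ == "__main__":
    # Hypothetical stand-in for a trained generator: 8 inputs, 16 hidden, 4 outputs.
    init = random.Random(42)
    w1 = [[init.gauss(0.0, 1.0) for _ in range(8)] for _ in range(16)]
    w2 = [[init.gauss(0.0, 1.0) for _ in range(16)] for _ in range(4)]
    x = [1.0] * 8  # the pinned input pattern
    baseline = forward(x, w1, w2)
    for level in (0.0, 0.06, 0.6, 3.0):
        samples = generate(x, w1, w2, level, n=50)
        print(f"level={level:<5} mean deviation={mean_deviation(samples, baseline):.4f}")
```

With a genuinely trained generator, one would sweep `level` and inspect the outputs themselves to look for a band where they stay plausible yet novel; with random weights, the printout only shows the drift from the undisturbed output growing with the disturbance level.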

Thereafter, this is how events unfolded...

1998, Creativity Machine vs Genetic Algorithm

IEI's first application of Creativity Machines to art came in 1997, with the generation of hypothetical portraits. Our patented neural systems were pitted against genetic algorithms (GAs) to demonstrate the relative ease with which they produced novel faces. As long as their generator nets were subjected to a specific level of synaptic damage (i.e., the above-mentioned Goldilocks zone), they produced face-like imagery. In the comparison below, for instance, the GA struggles to produce a single face, while the Creativity Machine (CM) successfully originates one face after another near a mean synaptic damage level of 0.06. When the CM is detuned with excessive mean synaptic damage, it too begins to output nonsense.

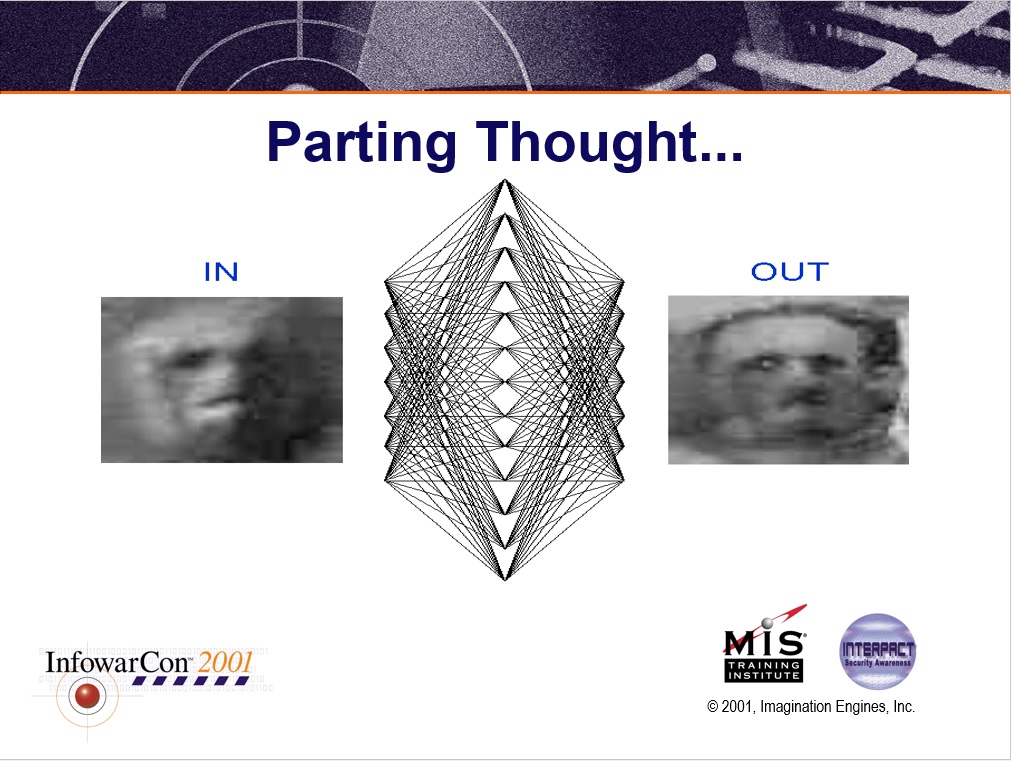

2001, Fascists in Space!

At the conclusion of an invited presentation at Infowarcon 2001 in Washington, DC, our founder showed what happens when the pattern input to a Creativity Machine's generator net is a well-known natural terrain feature on Mars. Through pareidolia, the net perceives that it is looking at a fascist dictator decked out in a space suit! This demonstration predates similar, well-publicized efforts in 2013 in which small patches of a scene were converted to alternative imagery. Although intended only as light entertainment following a presentation on 'twisted' cyber technologies, it suggested how easily perception in both humans and AI could be manipulated. Soon after, various government agencies offered contracts for achieving the same effect in both imagery and natural language generation.

2001, More Face Generation

Likewise in 2001, using dual-processor Pentium II machines, we were able to push the technology to generate faces of a quarter million grayscale pixels. The training exemplars for the generator were constructed with a police sketch artist software package. The sequence below shows a progression of faces generated by the Creativity Machine, all of which are distinct from the portraits contained in the training set.

2012, DABUS Face Generation in Color

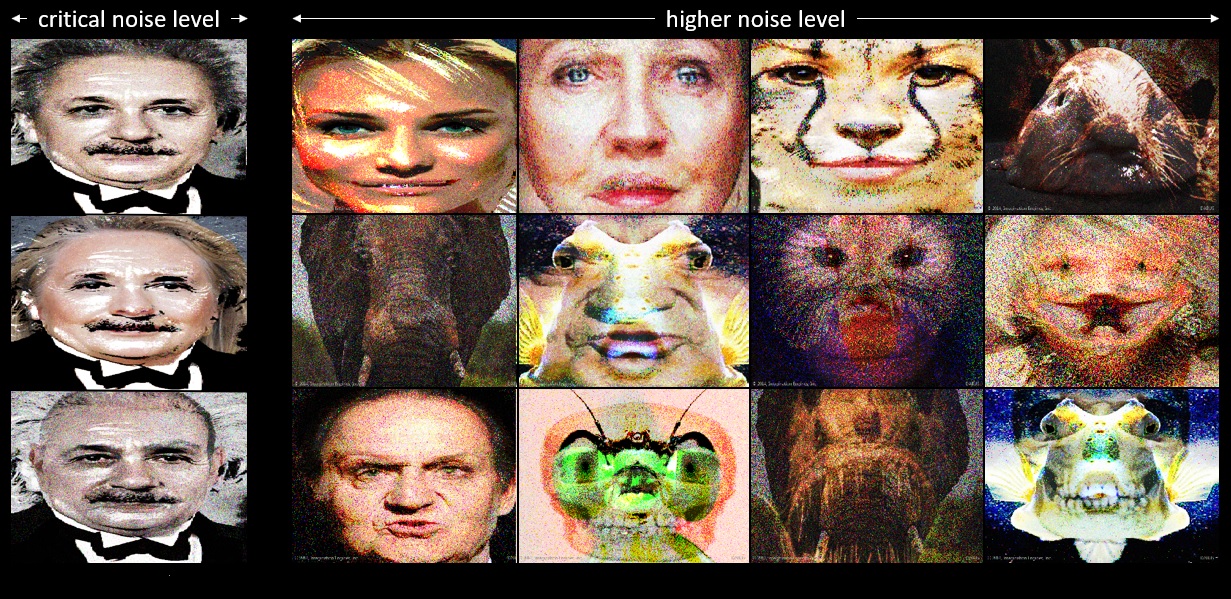

Ironically, the main drawback to using the Creativity Machine to generate faces from a deck of human portraits was that the resulting faces weren't that creative. To be more precise, the generated portraits tended to resemble training patterns, with some minor but plausible pixel variations. With the invention of DABUS systems, comprising thousands of artificial neural nets, this advanced form of Creativity Machine was able to hybridize human faces with animals, as well as with various inanimate objects, to create more whimsical imagery.

In the figure below, we see what happens when DABUS is run at various levels of synaptic disturbance. In the low-noise regime, the system slightly hybridizes and mutates stored memories of faces, for example producing imaginary members of the Einstein clan (left). As the perturbation level is progressively raised, much greater diversity appears, with hybridization occurring between human and non-human faces.

Interestingly, DABUS was also allowed to carry out image-to-text processing, in which many of these hybridized faces became associated with autonomously generated captions. For instance, DABUS named the fictitious Einsteins, from top to bottom, Bruce, Hillary, and Curly!

2012-2017, DABUS Fantasy Scene Generation

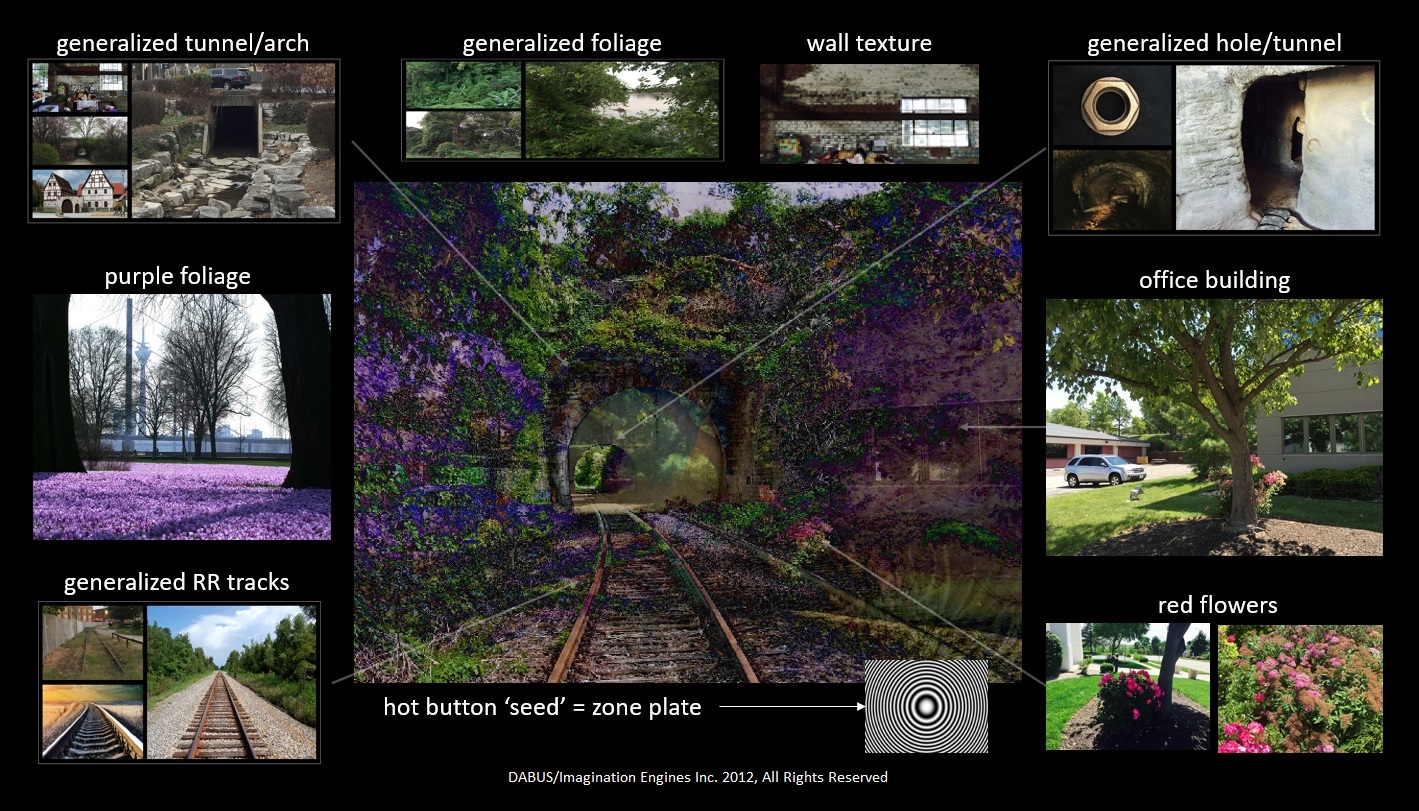

By 2012, DABUS had seen thousands of photographs and was able to synthesize many new imaginary scenes. Note that these were full 640×480-pixel, 24-bit-depth renderings, not attempts at replacing small patches of existing photos with substitute imagery. In other words, each of these images was formed in parallel. Furthermore, the system cumulatively learned what appealed most to it, thereafter generating many variations on its favorite themes.

2014: Basement Cleanup

Generated and named by DABUS.

2014: Industrial Paradise

Generated and named by DABUS.

2014: Locomotive Bubble Bath

Generated and named by DABUS.

2014: Sidewalk Beggar

Generated and named by DABUS.

2015: Fish Dream

Generated and named by DABUS.

2017: At Your Own Risk

Generated and named by DABUS.

2012, DABUS Trauma Imagery

Out of pure curiosity, we allowed connections within DABUS to be snipped at random to simulate a dying brain, which generated some very interesting imagery. The system was also able to name the resulting artwork, at least during the early stages of neural destruction. Copyright notices were added to these works in 2016, when these and other trauma-induced images appeared in Urbasm under the title "Artificial Intelligence – Visions (Art) of a Dying Synthetic Brain."
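Random snipping of connections can be pictured as cumulative, irreversible pruning, and a minimal sketch of that reading follows. It is an assumption, not the procedure actually applied to DABUS, which the text does not specify: here a single random order over all connections is fixed once, "snipping" means setting a weight to zero, and each successive stage cuts a larger prefix of that order. The function `dying_schedule` and its parameters are hypothetical.

```python
import random

def dying_schedule(weights, stages, seed=0):
    """Yield copies of `weights` with a growing random set of connections cut.

    ASSUMPTION: snipping a connection means setting its weight to 0.0, and the
    damage accumulates, so a connection cut at one stage stays cut at every
    later stage. Stage 0 is the intact net; stage `stages` has every
    connection cut.
    """
    rng = random.Random(seed)
    # Fix one random order over all (row, column) connection indices.
    order = [(i, j) for i, row in enumerate(weights) for j in range(len(row))]
    rng.shuffle(order)
    for stage in range(stages + 1):
        # Cut the first stage/stages fraction of that fixed order.
        cut = set(order[: round(stage / stages * len(order))])
        yield [[0.0 if (i, j) in cut else w for j, w in enumerate(row)]
               for i, row in enumerate(weights)]

if __name__ == "__main__":
    weights = [[0.5, -1.2, 0.8], [0.3, 0.9, -0.4]]  # hypothetical 2x3 weight matrix
    for stage, damaged in enumerate(dying_schedule(weights, stages=3)):
        intact = sum(w != 0.0 for row in damaged for w in row)
        print(f"stage {stage}: {intact} of 6 connections intact")
```

Fixing the cut order up front is what makes the decline monotonic; drawing a fresh random subset at each stage would instead let "dead" connections come back to life.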

2012: Recent Entrance

2012: Image with generated title.

2012: Guardian Greeter

2012: Image with generated title.

2012: No Tranquility at All

2012: Image with generated title.

2012: Retired Hell Admin Bldg

2012: Image with generated title.

Below are some of the many elements contributing to the trauma-generated "A Recent Entrance to Paradise." Most important is the zone plate image, which acts as a so-called "hot button," initiating a simulated neurotransmitter surge and the selective reinforcement of the entire chain of neural nets whose cleverly merged memories constitute this image. In effect, the zone plate became an impactful, artificially introduced memory, tantamount to the recollections of existential events stored in our brains (e.g., falling, hunger, thirst, satiation). In humans, such hot-button memories are congenital, acquired through generations of Darwinian natural selection.

2014, DABUS Free Run

DABUS was allowed free run, the equivalent of what humans might call free association. Below, one may see a stream of internal imagery, with each scene gracefully blending into the next. Sometimes, more radical shifts occur.

So, make no mistake: these works of art are not the products of deep learning or adversarial nets. They were generated by Creativity Machines and DABUS long before these technologies, with one image in particular, "A Recent Entrance to Paradise," forming the basis of legal debates around the planet as to whether AI systems may own the copyrights to the art they create.

It is most important to realize that DABUS is not being used as a tool to assist human artists. What emerges from this system are the thoughts of an autonomous and feeling machine intelligence!